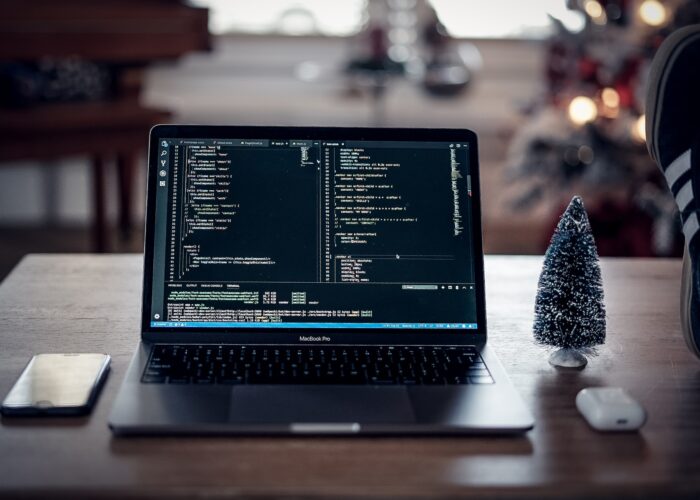

Java Development

Java is one of the most widely used programming languages. It is not difficult to learn, which makes it a good fit for people who are new to programming.

Java Basic

The introductory Java course is designed for those who are just starting out in the IT industry and are not yet familiar with the basics of programming.

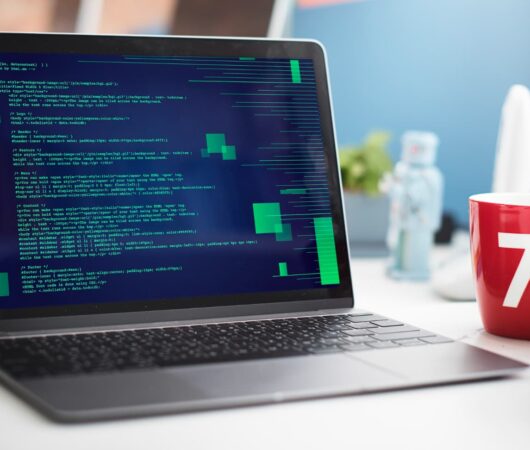

Java Pro

During the course, students learn to create Java applications and gain an understanding of OOP principles. The course is designed for people with basic knowledge of any C-like programming language.

Java Enterprise

In the Java Enterprise course, students master the EE technology stack used to build business-level applications and services, which makes them more in demand in the modern IT market.

Our Courses

Our Java programming courses help you take a comprehensive approach to learning the language and thoroughly master its syntax, capabilities, and tools for writing strong projects.

Java Enterprise

Advanced Level

Java Basic

Our Development Companions

This thorough approach positions Avenga as a software testing services provider and a partner committed to excellence in testing solutions.

Orangesoft offers comprehensive expertise on how to build a telemedicine system, delivering secure, user-friendly, and scalable solutions for modern healthcare needs.

Kodjin FHIR Server solutions – your choice to comply with HL7

Innowise, a financial software development company, specializes in creating custom financial solutions, blending innovative design with cutting-edge technology to enhance efficiency and user satisfaction in the finance sector.

Boost your website’s visibility and traffic with expert advice from Doktor Seo. Discover game-changing SEO insights at https://drseo.blog/.

To efficiently load data into your database, you can use the SQL import CSV feature for quick and seamless integration.

GetDevDone provides pixel-perfect PSD to HTML conversion services. Dedicated project manager, meticulous QA testing, and timely delivery.

By choosing Syndicode as a reliable Node.js development provider, you will get a secure, stable solution and scalable product for any niche. Collaborate with the best!

Join our “Generative AI Adoption for Engineering Teams” course to learn how to enhance product engineering with GenAI. The program provides practical insights on integrating generative AI into your workflows.

Topflight is an award-winning medical app developer. Read this guide about healthcare app development to learn more about development steps, must-have features, budget, and monetization models.

Years Experience

Teachers

Students

Projects

Why Choose our School

Our teachers are all practicing specialists from top IT companies.

The teacher devotes time to each student.

Effective and convenient training.

Urgent issues get timely solutions.

Sign Up for Our School

Our Blog

Find out about the latest promotions and discounts, company vacancies, upcoming events and more.

Top FHIR Use Cases in Healthcare Today

Fast Healthcare Interoperability Resources (FHIR) has emerged as a pivotal standard in healthcare, revolutionizing the way data is exchanged and utilized across

Top Reasons Healthcare Providers Should Adopt FHIR

Java for Blogging: Pros and Cons Explained

Java is a versatile, high-level programming language that has been a game-changer for developers since its inception in the mid-90s. Created by

Java vs. WordPress for Enterprise Web Development

When it comes to enterprise web development, choosing the right platform can be a game-changer. Two prominent options are Java and WordPress,

Why learn Java in 2024?

Java remains one of the most popular programming languages in the world. Its versatility and robustness make it a preferred choice for

The Enduring Relevance of Java in Custom Software Development

In the ever-evolving world of software development, programming languages come and go. However, some, like Java, have stood the test of time

Logitech Wireless Gamepad F710 Review

Continuing our favorite blog topic, we are going to talk about cool gaming accessories. In search of something really interesting, we decided

Innovation Horizons: A Journey Through the Frontiers of Information Technology

Introduction:

Welcome to the vanguard of innovation, where the heartbeat of tomorrow resonates within the sphere of Information Technology

The Impact of AI on Tech Evolution

Artificial Intelligence (AI) is everywhere these days, and it's changing how we live and work. From helping us find the best routes

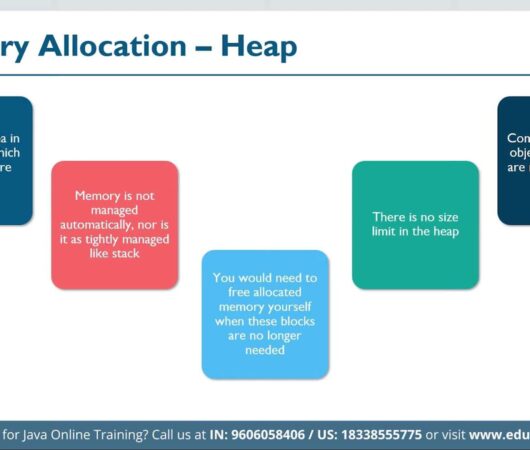

Demystifying Java Virtual Machine Memory Allocation

When you engage in Java programming, the performance of your application becomes intimately tied to the efficient management of memory, especially when

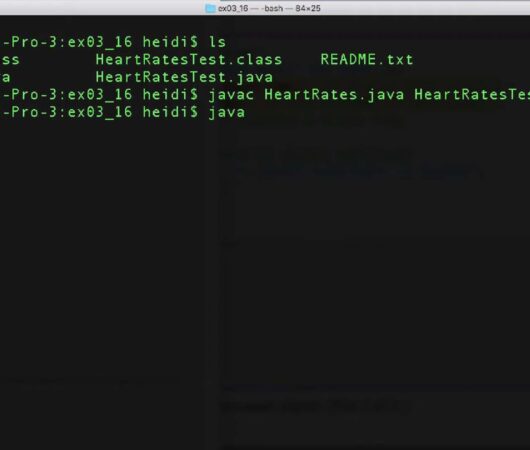

Efficient Handling of Multiple Java Files

Within the dynamic landscape of data administration, the core pillar lies in the realm of storage solutions, which play an indispensable role

JDK Tools: Mastering Troubleshooting Techniques in Java

Java server applications are the backbone of modern web services and enterprise systems, powering everything from online banking to social media platforms.

Java 8 Strings: Efficient String Handling Techniques

In the ever-evolving realm of Java programming, Java 8 has introduced remarkable enhancements, especially in the domain of strings. String manipulation, an

GC Profiler: Unearthing Java Memory Optimization Techniques

In the fast-paced world of software development, every millisecond counts. Whether you're building a high-performance web application or a data-intensive backend system,

Map Reduce Design Patterns Algorithm: Craft Efficient Data

In the ever-evolving landscape of data processing, MapReduce has emerged as a robust paradigm for efficiently handling vast amounts of data. "MapReduce

ArrayDeque vs ArrayList: Analysis and Use Cases in Java

In the world of Java programming, optimizing memory consumption is crucial for building efficient and responsive applications. As developers, we often find

Converting Strings to Any Other Objects in Java

Java is renowned for its flexibility in handling various data types and objects. One of the essential skills every Java programmer should

ArrayList in Java: Unleashing the Power of Dynamic Arrays

In the realm of Java programming, optimizing data structures is essential to ensure that your applications run efficiently. One such versatile data