The Java `hashCode` method is a fundamental part of object-oriented programming in Java, especially when working with hash-based collections such as `HashMap` and `HashSet`. It determines which bucket an object lands in, and therefore how quickly these collections can store and retrieve it. However, the default `hashCode` inherited from `Object` is based on object identity, which rarely suits value-like classes, and a hand-written replacement can perform poorly if it is not designed with care. In this article, we’ll explore the significance of the `hashCode` method, common performance issues, and techniques to tune it for better performance.

Understanding the `hashCode` Method

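Before delving into performance optimization, let’s look at what the `hashCode` method is and why it matters. Every Java object inherits a `hashCode` method from `Object`; it returns a 32-bit signed `int`. Hash-based collections use this value to choose a bucket, then call `equals` to confirm a match, so the two methods must agree: objects that are equal according to `equals` must return the same hash code. The reverse is not required. Unequal objects may share a hash code, but the fewer such collisions, the faster the collection.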
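To make the contract concrete, here is a minimal sketch of a value class that overrides both methods. The `Point` class and its fields are hypothetical; `java.util.Objects.hash` is a standard JDK helper (Java 7 and later) that combines its arguments into a single hash code.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

// Hypothetical immutable value class used for illustration.
final class Point {
    private final int x;
    private final int y;

    Point(int x, int y) {
        this.x = x;
        this.y = y;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Point)) return false;
        Point other = (Point) o;
        return x == other.x && y == other.y;
    }

    // Hashes exactly the fields that equals() compares,
    // so equal points always produce equal hash codes.
    @Override
    public int hashCode() {
        return Objects.hash(x, y);
    }
}

class PointDemo {
    public static void main(String[] args) {
        Set<Point> set = new HashSet<>();
        set.add(new Point(1, 2));
        // A distinct but equal instance is found: it hashes to the same
        // bucket, and equals() then confirms the match.
        System.out.println(set.contains(new Point(1, 2))); // true
    }
}
```

If `hashCode` were left out, the two equal `Point` instances would almost certainly carry different identity hash codes, and the lookup would fail even though `equals` returns `true`.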

Common Performance Issues

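Classes that keep the default, identity-based `hashCode` from `Object` work correctly only when equality means identity. If a class overrides `equals` but not `hashCode`, equal objects will almost always have different hash codes, and lookups with an equal but distinct instance fail. Once you write your own `hashCode`, two problems commonly hurt performance: hash collisions and poor distribution of hash codes.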

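- Hash Collisions: When two unequal objects produce the same hash code, a hash collision occurs. Both objects end up in the same bucket, and the collection must call `equals` on the entries in that bucket to find a match. With many collisions, lookups degrade from roughly constant time toward a linear scan;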

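- Inefficient Distribution: Even without exact collisions, hash codes can cluster. `HashMap` chooses a bucket from the low-order bits of a lightly mixed hash code, so a `hashCode` whose values vary in only a few bits, or fall within a narrow range, crowds many keys into a few buckets and slows both storage and retrieval.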
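Both problems are easy to reproduce. The sketch below is illustrative, not a benchmark: it counts how many distinct hash codes two candidate formulas produce for a 100-by-100 grid of points, then models how a power-of-two table picks a bucket. The `bucketIndex` helper re-implements the spreading step that OpenJDK’s `HashMap` applies (`h ^ (h >>> 16)`) purely for illustration; it is not a call into `HashMap`, and all class and method names are hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

class DistributionDemo {
    // Weak formula: (x, y) collides with (y, x), and (0, 2) collides with (1, 1).
    static int sumHash(int x, int y) {
        return x + y;
    }

    // Conventional formula: multiply the running result by 31 before adding the next field.
    static int polyHash(int x, int y) {
        return 31 * x + y;
    }

    // Counts the distinct hash codes produced over a size-by-size grid of points.
    static int distinctHashes(int size, boolean weak) {
        Set<Integer> seen = new HashSet<>();
        for (int x = 0; x < size; x++) {
            for (int y = 0; y < size; y++) {
                seen.add(weak ? sumHash(x, y) : polyHash(x, y));
            }
        }
        return seen.size();
    }

    // Bucket index in a power-of-two table, optionally folding the high
    // 16 bits into the low 16 bits first (modeled on OpenJDK's HashMap).
    static int bucketIndex(int h, int tableSize, boolean spread) {
        int mixed = spread ? h ^ (h >>> 16) : h;
        return (tableSize - 1) & mixed;
    }

    public static void main(String[] args) {
        System.out.println(distinctHashes(100, true));  // 199
        System.out.println(distinctHashes(100, false)); // 3169

        // Sixteen hash codes that differ only in their upper 16 bits.
        Set<Integer> plain = new HashSet<>();
        Set<Integer> spread = new HashSet<>();
        for (int k = 0; k < 16; k++) {
            int h = k << 16;
            plain.add(bucketIndex(h, 16, false));
            spread.add(bucketIndex(h, 16, true));
        }
        System.out.println(plain.size());  // 1 (every key lands in bucket 0)
        System.out.println(spread.size()); // 16
    }
}
```

With `x + y`, the 10,000 points share only 199 hash codes; `31 * x + y` yields 3,169. The second half shows that, without spreading, hash codes that differ only in their upper bits all fall into bucket 0 of a 16-bucket table.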

Techniques for Performance Tuning

To overcome these performance issues, you can implement your own `hashCode` method or use libraries that provide enhanced hashing algorithms. Here are some techniques to consider:

- Custom `hashCode` Implementation: Write a `hashCode` method for your classes that combines all of the fields used by `equals` (and only those fields), mixing them so that the resulting hash codes are spread evenly;

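- Using Libraries: Third-party libraries such as Guava (`com.google.common.hash.Hashing`) or Apache Commons Lang (`HashCodeBuilder`) can help produce well-mixed hash values; for simple cases, the JDK’s own `java.util.Objects.hash` is often enough;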

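- Consistent Hashing: When keys must be spread across multiple servers or partitions rather than across the buckets of a single in-memory collection, consistent hashing places keys and nodes on a hash ring so that adding or removing a node remaps only a small fraction of the keys;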

- Caching: Consider caching hash codes for immutable objects, as the hash code remains constant throughout the object’s lifetime.
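The custom-implementation and caching techniques combine naturally. The following is a minimal sketch, assuming a hypothetical immutable `ImmutableKey` class: `hashCode` combines exactly the fields that `equals` uses with the conventional multiply-by-31 pattern, and the result is cached lazily in a field, similar in spirit to how `String` caches its hash.

```java
import java.util.Arrays;
import java.util.Objects;

// Hypothetical immutable key whose hash code is computed once and cached.
final class ImmutableKey {
    private final String name;
    private final int[] parts; // defensively copied and never exposed
    private int hash;          // 0 means "not computed yet"

    ImmutableKey(String name, int[] parts) {
        this.name = Objects.requireNonNull(name, "name");
        this.parts = parts.clone();
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof ImmutableKey)) return false;
        ImmutableKey other = (ImmutableKey) o;
        return name.equals(other.name) && Arrays.equals(parts, other.parts);
    }

    @Override
    public int hashCode() {
        int h = hash; // read the cache field once
        if (h == 0) {
            // Combine every field that equals() uses, multiplying by 31 between fields.
            h = name.hashCode();
            h = 31 * h + Arrays.hashCode(parts);
            // Unsynchronized write: any thread that sees 0 recomputes the same value.
            hash = h;
        }
        return h;
    }
}
```

The unsynchronized cache is safe only because the object is immutable: every thread computes the same value, and writes to an `int` field are atomic. A key that genuinely hashes to 0 is simply recomputed on each call, which is harmless.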

The Impact of Hashing in Data Structures

Hashing is a fundamental concept in computer science and plays a critical role in data structures beyond just HashMaps and HashSets. Understanding how hashing works and its importance in various data structures can provide valuable insights into optimizing the `hashCode` method.

- Hash Tables: Hash tables are widely used data structures that rely on hashing for fast data retrieval. A well-tuned `hashCode` method can significantly improve the performance of hash tables;

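- Bloom Filters: Bloom filters use hashing to test set membership compactly, at the cost of occasional false positives. Their false-positive rate assumes the hash functions spread items uniformly across the bit array, so poorly distributed hashes push the rate above its expected value;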

- Cryptographic Applications: Cryptographic hash functions such as SHA-256 are essential for digital signatures, password hashing, and more. Their requirements differ sharply from those of `hashCode`, which is not a cryptographic hash, but they highlight the versatility and significance of hashing in computing.
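To show how a Bloom filter depends on hashing, here is a minimal sketch (a hypothetical class, not a library API) that derives `k` bit positions from an element’s `hashCode` using double hashing, `g_i = h1 + i * h2`. The bit-array size and the number of hash functions are caller-chosen assumptions, and the scrambling step is the 32-bit finalizer from MurmurHash3, used here to compensate for weakly distributed `hashCode` values.

```java
import java.util.BitSet;

// Hypothetical minimal Bloom filter built on hashCode() plus double hashing.
final class BloomFilter<T> {
    private final BitSet bits;
    private final int size;      // number of bits (m)
    private final int hashCount; // number of derived hash functions (k)

    BloomFilter(int size, int hashCount) {
        this.bits = new BitSet(size);
        this.size = size;
        this.hashCount = hashCount;
    }

    // MurmurHash3's 32-bit finalizer: scrambles all input bits into all output bits.
    private static int mix(int h) {
        h ^= h >>> 16;
        h *= 0x85ebca6b;
        h ^= h >>> 13;
        h *= 0xc2b2ae35;
        h ^= h >>> 16;
        return h;
    }

    // i-th bit position: g_i = h1 + i * h2, reduced into [0, size).
    private int position(T item, int i) {
        int h1 = mix(item.hashCode());
        int h2 = mix(h1) | 1; // forced odd, hence a non-zero step between probes
        return Math.floorMod(h1 + i * h2, size);
    }

    void add(T item) {
        for (int i = 0; i < hashCount; i++) {
            bits.set(position(item, i));
        }
    }

    // false means "definitely absent"; true means "possibly present".
    boolean mightContain(T item) {
        for (int i = 0; i < hashCount; i++) {
            if (!bits.get(position(item, i))) return false;
        }
        return true;
    }
}
```

An element that was added is always reported as possibly present; an element that was never added may still be reported present (a false positive), and that becomes more likely as the bit array fills.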

Performance vs. Security Trade-offs

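While optimizing the `hashCode` method for performance is important, it’s essential to consider the security implications as well. Java’s `hashCode` is a fast, non-cryptographic 32-bit hash and should never be used where cryptographic guarantees are required. Even in ordinary collections, predictable hash codes let an attacker who controls the keys (for example, request parameters) craft many colliding inputs and slow a service down, an attack known as hash flooding.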

- Collision Resistance: Cryptographically secure hash functions prioritize collision resistance to prevent attackers from finding two different inputs that produce the same hash value. In contrast, performance-oriented hash functions may not offer the same level of collision resistance;

- Preimage Resistance: Cryptographic hash functions are designed to resist reverse engineering, making it computationally infeasible to find the input that produced a specific hash value. Performance-focused hash functions may not provide the same level of preimage resistance;

- Security Standards: When working with security-sensitive applications, it’s crucial to adhere to established security standards and use hash functions that meet those standards.
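The difference in collision resistance is easy to demonstrate. Because `String.hashCode` is a public, deterministic formula, `"Aa"` and `"BB"` share the hash code 2112, and every same-length concatenation of those two blocks collides as well, so colliding keys can be mass-produced. The sketch below (hypothetical class and method names) generates such keys and compares them with SHA-256 digests from the standard `java.security.MessageDigest` API.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Base64;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class CollisionDemo {
    // Builds all 2^blocks strings made of "Aa"/"BB" blocks; they share one String.hashCode.
    static List<String> collidingStrings(int blocks) {
        List<String> result = new ArrayList<>();
        result.add("");
        for (int i = 0; i < blocks; i++) {
            List<String> next = new ArrayList<>();
            for (String s : result) {
                next.add(s + "Aa");
                next.add(s + "BB");
            }
            result = next;
        }
        return result;
    }

    // SHA-256 digest of the UTF-8 bytes, Base64-encoded for easy comparison.
    static String sha256(String s) throws NoSuchAlgorithmException {
        MessageDigest md = MessageDigest.getInstance("SHA-256");
        return Base64.getEncoder().encodeToString(md.digest(s.getBytes(StandardCharsets.UTF_8)));
    }

    public static void main(String[] args) throws NoSuchAlgorithmException {
        List<String> keys = collidingStrings(4); // 16 distinct strings
        Set<Integer> hashCodes = new HashSet<>();
        Set<String> digests = new HashSet<>();
        for (String k : keys) {
            hashCodes.add(k.hashCode());
            digests.add(sha256(k));
        }
        System.out.println(hashCodes.size()); // 1
        System.out.println(digests.size());   // 16
    }
}
```

All sixteen keys would land in one `HashMap` bucket, while their SHA-256 digests are all different. This is acceptable for a collection, which tolerates collisions, but it is exactly why `hashCode` must not stand in for a cryptographic hash.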

The Evolution of HashCode in Java

To appreciate the significance of optimizing the `hashCode` method, it’s helpful to consider how hash codes have evolved within the Java ecosystem. The `hashCode` inherited from `Object` returns an identity hash code, the same value reported by `System.identityHashCode`. The specification leaves its implementation to the JVM; older Javadoc described it as typically derived from the object’s internal address, although the language has never required this. Whatever the mechanism, an identity hash reflects only which object you have, not what it contains, so it cannot serve classes whose equality is based on their fields.

In HotSpot, JDK 8 made a thread-local pseudo-random generator based on George Marsaglia’s xorshift algorithm the default source of identity hash codes; it is cheap to compute and distributes values well. Once assigned, an object’s identity hash never changes, even if the garbage collector moves the object. However, no JVM-level change can account for the equality semantics of user-defined classes; that remains the job of an overridden `hashCode`.

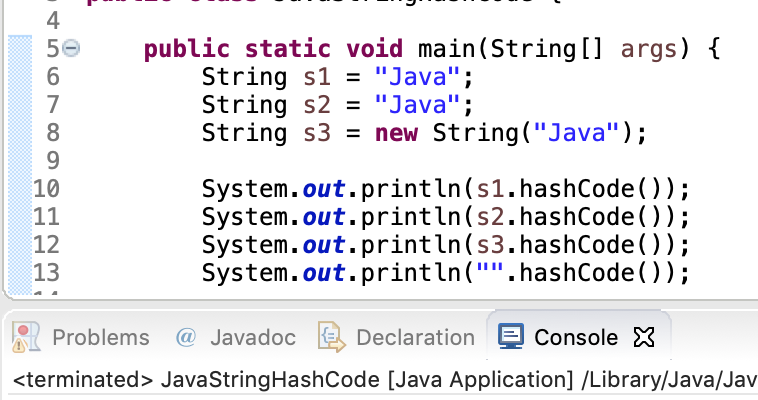

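Java 8 also changed how `HashMap` copes with collisions: when a single bucket accumulates more than eight entries and the table has at least 64 buckets, that bucket is converted from a linked list into a balanced tree, so heavily colliding keys degrade lookups to O(log n) rather than O(n), most effectively when the keys implement `Comparable`. `String.hashCode`, by contrast, has been defined by the same documented formula since the early JDK releases, and each `String` caches its hash after the first computation. These changes reflect Java’s ongoing attention to performance across its standard library.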
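Because the `String` formula is documented (`s[0]*31^(n-1) + s[1]*31^(n-2) + ... + s[n-1]`, computed with wrapping `int` arithmetic), it can be recomputed by hand. This sketch (hypothetical class name) does so with Horner’s rule and compares the result with `String.hashCode`; it also prints an identity hash code for contrast, whose value depends on the JVM and the run.

```java
class StringHashDemo {
    // Recomputes String.hashCode() from its documented polynomial.
    // Horner's rule: h = 31*h + c for each char, overflowing like int arithmetic.
    static int stringHash(String s) {
        int h = 0;
        for (int i = 0; i < s.length(); i++) {
            h = 31 * h + s.charAt(i);
        }
        return h;
    }

    public static void main(String[] args) {
        String word = "hashCode";
        System.out.println(stringHash(word) == word.hashCode()); // true
        // Identity hash of a fresh copy: unrelated to the contents and
        // not guaranteed to be the same from one run to the next.
        System.out.println(System.identityHashCode(new String(word)));
    }
}
```

The content-based hash is reproducible on every JVM, which is what makes `String` keys safe to hash consistently across processes; the identity hash offers no such guarantee.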

Performance Tuning for Specific Use Cases

The need for `hashCode` optimization can vary significantly depending on the nature of your application and the types of objects you work with. Here are some specific use cases where performance tuning of `hashCode` can be particularly beneficial:

- Large Datasets: When dealing with large datasets, optimizing the `hashCode` method can lead to substantial performance gains. By reducing collisions and improving distribution, you can significantly speed up data retrieval;

- Real-time Systems: In real-time systems, every millisecond counts. A well-distributed `hashCode` helps keep lookup latency low and predictable, which matters for systems like gaming or financial trading that must respond quickly to incoming data and requests;

- Distributed Systems: Distributed systems often rely on hash-based algorithms to distribute data across nodes. A well-distributed hash leads to more balanced partitions and fewer hot spots; note that partitioning requires hash values that are stable across JVMs, which rules out identity hash codes;

- Caching: Caching is a common optimization technique. By caching hash codes for immutable objects, you can further improve lookup times and reduce the computational overhead of calculating hash codes repeatedly;

- Security Applications: Where cryptographic guarantees are required, `hashCode` is the wrong tool. Use a vetted cryptographic hash such as SHA-256 through `java.security.MessageDigest`; such functions prioritize security over raw performance.
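For the distributed case, consistent hashing is one common way to map keys to nodes. The following is a minimal sketch, not a production implementation: the node names, the number of virtual nodes per server, and the use of `String.hashCode` followed by the MurmurHash3 32-bit finalizer are all assumptions made for illustration. `String.hashCode` is used because its formula is specified and therefore identical on every JVM, unlike identity hash codes.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical consistent-hashing ring with virtual nodes.
final class HashRing {
    private final TreeMap<Integer, String> ring = new TreeMap<>();
    private final int virtualNodes;

    HashRing(int virtualNodes) {
        this.virtualNodes = virtualNodes;
    }

    // Stable across JVMs: String.hashCode() is specified, and the MurmurHash3
    // finalizer scrambles it so similar labels spread around the ring.
    private static int position(String label) {
        int h = label.hashCode();
        h ^= h >>> 16;
        h *= 0x85ebca6b;
        h ^= h >>> 13;
        h *= 0xc2b2ae35;
        h ^= h >>> 16;
        return h;
    }

    void addNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.put(position(node + "#" + i), node);
        }
    }

    void removeNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            // Only remove entries still owned by this node.
            ring.remove(position(node + "#" + i), node);
        }
    }

    // A key belongs to the first virtual node at or after its position,
    // wrapping around to the start of the ring.
    String nodeFor(String key) {
        if (ring.isEmpty()) throw new IllegalStateException("no nodes");
        Map.Entry<Integer, String> e = ring.ceilingEntry(position(key));
        return (e != null ? e : ring.firstEntry()).getValue();
    }
}
```

Removing a node moves only the keys that node owned; every other key keeps its assignment, which is what makes consistent hashing attractive when servers join or leave. More virtual nodes per server generally smooth out the load at the cost of a larger ring.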

Maintaining Compatibility

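While optimizing the `hashCode` method can bring significant performance benefits, it’s essential to ensure backward compatibility with existing code. The general contract only requires hash codes to stay consistent within a single execution, but real code often depends on more: hash values persisted to disk or sent over the network, partition assignments derived from them, or tests that assume a particular `HashMap` iteration order. Changing `hashCode` can break such code, and any new implementation must still agree with `equals`. Therefore, when implementing custom `hashCode` methods, document the changes clearly and consider versioning to avoid breaking existing code.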

Optimizing the `hashCode` method is a valuable practice for Java developers looking to enhance the performance of their applications. It’s a nuanced process that requires a deep understanding of the specific use cases and potential trade-offs, especially in security-sensitive contexts. By carefully implementing custom hash codes, leveraging libraries, and considering the broader context of hashing in computer science, developers can strike the right balance between performance and reliability in their Java applications.

Conclusion

In summary, the `hashCode` method is a critical component of Java’s hash-based data structures. By understanding its importance and addressing common performance issues through custom implementations or libraries, you can significantly enhance the efficiency of your applications. However, it’s essential to keep security in view: `hashCode` is not a cryptographic hash, so security-sensitive code needs dedicated cryptographic functions. Remember that performance tuning should be done judiciously, considering the specific requirements of your project.

By following these guidelines, you can ensure that your Java applications perform optimally when using hash-based collections and understand the broader implications of hashing in computer science.