In many Java applications, generating random numbers is a crucial aspect. Whether you’re working on a game, a simulator, or any other application where randomness is required, choosing the right tool for the job can significantly impact performance and results.

In the world of Java, two classes are commonly used for this purpose – ThreadLocalRandom and Random. In this comprehensive guide, we’ll explore the differences between these two classes, their operational characteristics, and when to use each of them.

ThreadLocalRandom

ThreadLocalRandom is a class introduced in Java 7, providing a convenient way to generate random numbers in a multithreaded environment. It belongs to the java.util.concurrent package and is specifically designed for use in parallel applications. Here are some key features and advantages of ThreadLocalRandom:

- Thread Safety: One of the most significant advantages of ThreadLocalRandom is its thread safety. When multiple threads simultaneously generate random numbers using a single Random instance, it can lead to delays and synchronization overhead. ThreadLocalRandom addresses this issue by providing each thread with its independent random number generator. This ensures that each thread can generate random numbers without blocking other threads, thus improving performance in multithreaded scenarios;

- Performance Boost: Besides enhancing thread safety, ThreadLocalRandom often outperforms the traditional Random class in multithreaded applications. The absence of contention between threads allows for more efficient parallel random number generation. If your application heavily relies on parallelism, using ThreadLocalRandom can significantly enhance performance;

- Predictable Seed Generation: ThreadLocalRandom generates seeds independently, using a combination of thread-specific and shared seeds. This seed generation strategy is designed to produce predictable, reproducible sequences of random numbers for each thread. While predictability isn’t always desirable, it can be useful for debugging and testing purposes;

- Ease of Use: ThreadLocalRandom offers a simple and convenient API for generating random numbers. With straightforward method calls, you can obtain random integers, longs, doubles, and other data types. It’s a reliable choice for scenarios where thread safety and performance are critical.

Random

The Random class has been part of the Java standard library since its early versions. It provides a basic random number generator suitable for many applications. Here are some characteristics of the Random class:

- Simplicity: Random is easy to use and requires minimal configuration. You create an instance of Random and call its various methods to generate random numbers of different types. It’s a good choice for straightforward use cases where multithreading is not a concern;

- Thread Safety Issues: One of the primary drawbacks of the Random class is its lack of thread safety. If you intend to use a single Random instance across multiple threads, manual synchronization is required to prevent data corruption and unexpected behavior. The synchronization overhead can impact performance in multithreaded applications;

- Predictable Seed Generation: By default, Random generates seeds using the current system time in milliseconds. This means that if you create multiple Random instances quickly, they might have the same seeds and produce similar sequences of random numbers. In applications where true randomness and unpredictability are crucial, this behavior can be limiting;

- Limited Parallelism Support: Due to its lack of thread safety requirements and synchronization, using Random in multithreaded applications can be challenging. While these issues can be worked around with explicit synchronization, it may not be the most efficient choice for parallel scenarios.

ThreadLocalRandom vs. Random: Comparison Table

| Aspect | ThreadLocalRandom | Random |

|---|---|---|

| Thread Safety | Provides thread safety with independent generators for each thread, eliminating contention and synchronization issues in multi-threaded applications. | Lacks inherent thread safety, requiring manual synchronization in multi-threaded scenarios, potentially impacting performance. |

| Performance | Offers improved performance in multi-threaded environments due to reduced contention among threads, making it a suitable choice for concurrent applications. | May experience performance degradation in multi-threaded scenarios due to synchronization overhead. More suitable for single-threaded applications. |

| Seed Generation | Employs a seed generation strategy that combines thread-specific and shared seeds, ensuring predictable and reproducible sequences for each thread. Useful for debugging and testing. | Relies on the system time in milliseconds for seed generation by default, potentially leading to similar sequences when multiple instances are created quickly. May not offer the same predictability as ThreadLocalRandom. |

| Usability | Provides a straightforward API for generating random numbers, making it easy to use and integrate into applications. | Offers simplicity and ease of use, making it suitable for basic random number generation tasks in single-threaded applications. |

| Use Cases | Ideal for multi-threaded applications where thread safety and performance are paramount, as well as scenarios requiring predictable sequences for debugging. | Suited for single-threaded applications or quick random number generation tasks, and may be preferred for compatibility with existing code. |

Comparing these aspects will help you make an informed decision about whether to use ThreadLocalRandom or Random based on the specific requirements of your Java application.

When to Use ThreadLocalRandom or Random

The choice between ThreadLocalRandom and Random depends on the requirements and characteristics of your Java application. Here’s a brief guide on when to use each class:

Use ThreadLocalRandom when:

- Multithreaded Applications: If your application involves multiple threads simultaneously generating random numbers, it’s preferable to use ThreadLocalRandom. In such scenarios, it ensures thread safety and high performance;

- Predictable Sequences: When you need predictable and reproducible sequences of random numbers for debugging or testing purposes, ThreadLocalRandom’s seed generation strategy makes it a suitable choice.

Use Random when:

- Single-Threaded Applications: In single-threaded applications where thread safety is not a concern, Random can be a simple and lightweight choice for generating random numbers;

- Quick Randomness: If you need fast, one-off random numbers without the overhead of creating and managing multiple instances, the simplicity of Random can be useful;

- Compatibility: If you’re working with legacy code that already uses Random or have specific requirements for seed generation control, Random can be a pragmatic choice.

Following these recommendations will allow you to select the right class for generating random numbers in your Java application, ensuring optimal performance and behavior according to your project’s needs.

Performance Comparison

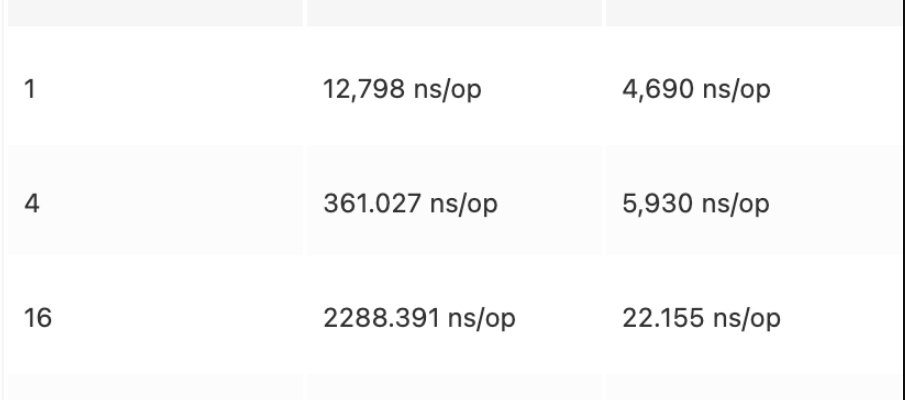

To understand how the choice between ThreadLocalRandom and Random affects performance, let’s consider a benchmark example.

Imagine you have a multithreaded application that requires the simultaneous generation of a large number of random integers. You decide to compare the performance of ThreadLocalRandom and a shared Random instance. Here’s what you might observe:

import java.util.Random;

import java.util.concurrent.ThreadLocalRandom;

public class RandomPerformanceComparison {

private static final int NUM_THREADS = 4;

private static final int NUM_ITERATIONS = 1000000;

public static void main(String[] args) throws InterruptedException {

// Using ThreadLocalRandom

long startTime = System.currentTimeMillis();

Thread[] threads = new Thread[NUM_THREADS];

for (int i = 0; i < NUM_THREADS; i++) {

threads[i] = new Thread(() -> {

for (int j = 0; j < NUM_ITERATIONS; j++) {

int randomInt = ThreadLocalRandom.current().nextInt();

}

});

threads[i].start();

}

for (Thread thread : threads) {

thread.join();

}

long endTime = System.currentTimeMillis();

System.out.println("ThreadLocalRandom time: " + (endTime - startTime) + " ms");

// Using a shared Random instance

startTime = System.currentTimeMillis();

Random sharedRandom = new Random();

threads = new Thread[NUM_THREADS];

for (int i = 0; i < NUM_THREADS; i++) {

threads[i] = new Thread(() -> {

for (int j = 0; j < NUM_ITERATIONS; j++) {

int randomInt = sharedRandom.nextInt();

}

});

threads[i].start();

}

for (Thread thread : threads) {

thread.join();

}

endTime = System.currentTimeMillis();

System.out.println("Shared Random time: " + (endTime - startTime) + " ms");

}

}In this benchmark, we create four threads, each generating one million random integers. We measure the time it takes to complete this task using ThreadLocalRandom and a shared Random instance.

In most cases, in multithreaded scenarios, ThreadLocalRandom significantly outperforms the shared Random instance. This is because ThreadLocalRandom avoids synchronization overhead and contention between threads.

Conclusion

In the world of Java, choosing the right tool for random number generation can significantly impact performance, predictability, and overall application functionality. ThreadLocalRandom and Random are two primary contenders, each with its set of strengths and weaknesses.

ThreadLocalRandom, designed with multithreaded environments in mind, excels in cases where thread safety and thread performance are critical. Its ability to provide each thread with an independent random number generator eliminates contention and boosts parallelism. Moreover, its predictable seed generation can be a valuable asset for debugging and testing.

On the other hand, Random performs well in single-threaded scenarios where simplicity and ease of use take precedence. Due to its lightweight nature, it is suitable for quick random number generation tasks. Additionally, if you are dealing with legacy code or require precise control over seed generation, Random can be a pragmatic choice.

Ultimately, the choice between ThreadLocalRandom and Random should align with the specific project requirements. Whether it’s the need for thread safety, predictability, or ease of use, both classes offer valuable tools for random number generation in Java. Understanding their differences and making a well-informed choice can ensure that randomness in Java applications is effectively utilized for its intended purpose.