When it comes to writing efficient and memory-conscious Java code, understanding how different data types consume memory is crucial. In this comprehensive guide, we’ll delve into the memory consumption of various Java data types, helping you make informed decisions when designing your applications.

Java’s flexibility and power come with the responsibility of managing memory effectively. Each primitive type has a size fixed by the language, while the size of an object depends on its fields and on the JVM it runs on. Making the right choices can significantly impact the performance and efficiency of your software. Primitive data types like `byte`, `int`, and `float` have fixed memory footprints and are more memory-efficient compared to their object counterparts. By choosing these wisely, you can reduce memory overhead.

Objects, on the other hand, have memory overhead due to object headers, references, and padding. Understanding how to minimize this overhead is crucial for optimizing memory usage.

Arrays are fixed-length sequences of elements of a single type. Their memory consumption depends on the data type they hold and the number of elements. Efficiently managing arrays is key to preventing excessive memory allocation. Strings are backed by an internal array: a `char` array before Java 9 and a `byte` array since. The memory usage of strings varies based on their length and character encoding. Handling strings efficiently is vital, especially in applications with extensive text processing.

By grasping how different Java data types consume memory, you can make informed decisions during your software design and development process. This knowledge empowers you to write code that not only performs well but also uses system resources efficiently, ensuring your Java applications are both responsive and economical.

Why Does Memory Consumption Matter?

Memory consumption is crucial in software development. It impacts performance, scalability, and costs. Efficient memory usage makes applications faster, more responsive, and cost-effective. It optimizes resource utilization and enhances the user experience. Prioritizing memory management is fundamental for successful software projects.

1. Performance Optimization

Efficient memory usage is a cornerstone of high-performance Java applications. When your code utilizes memory resources judiciously, it leads to noticeable improvements in execution speed and overall responsiveness. This optimization is particularly critical for applications where speed is of the essence, such as real-time systems, games, or financial applications.

By minimizing memory overhead, your Java applications can make better use of available resources. This not only results in quicker response times but also reduces the risk of memory-related bottlenecks, ensuring that your software can handle larger workloads without slowing down. In essence, optimizing memory usage goes hand-in-hand with achieving peak performance.

2. Scalability

Scalability is a fundamental requirement for modern software applications. Memory-efficient code plays a pivotal role in building scalable Java applications. As your user base and data volumes grow, excessive memory consumption can become a significant hindrance.

When you design your Java application with memory efficiency in mind, it becomes more adaptable to increasing loads. This scalability ensures that your application can handle a growing number of concurrent users or larger datasets without experiencing degradation in performance. In essence, memory-efficient code lays the foundation for a more elastic and responsive system.

3. Cost Efficiency

In cloud-based environments, memory is a billed resource. Cloud service providers typically price instances and containers by the memory provisioned for them, so an application with a smaller footprint can run on smaller or fewer instances, which lowers infrastructure costs. Optimizing memory consumption not only saves money but also contributes to a more sustainable and environmentally friendly operation. By minimizing the memory footprint of your Java applications, you reduce the resources required to host and run them, aligning with cost-conscious and environmentally responsible practices.

Optimizing memory consumption in Java is not just a technical consideration; it’s a strategic decision that can significantly enhance performance, scalability, and cost-efficiency. By carefully managing memory resources, you can create software that not only excels in execution but also offers economic advantages in resource-intensive cloud environments.

Java Data Types and Their Memory Consumption

Let’s explore the memory consumption of some common Java data types:

1. Primitive Data Types

a. `byte` (8 bits)

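The `byte` data type stores small integers within the range of -128 to 127. Each element of a `byte[]` occupies exactly 1 byte. As a field or local variable, a `byte` may be padded or widened by the JVM, so the saving is most visible in large arrays.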

b. `int` (32 bits)

The `int` data type uses 4 bytes of memory and can represent integers from -2,147,483,648 to 2,147,483,647.

c. `float` (32 bits)

A `float` also uses 4 bytes of memory. It is a single-precision IEEE 754 floating-point value with roughly 6 to 7 significant decimal digits of precision.
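The sizes above are exposed as constants on the wrapper classes, so you can check them without memorizing them. The following is a minimal sketch. The class name `PrimitiveSizes` and the helper `payloadBytes` are made up for illustration, and the helper counts only the raw element data, not any array header.

```java
class PrimitiveSizes {
    // Raw data size of a primitive array: element size times element count.
    // This deliberately ignores the array's own object header.
    static long payloadBytes(int bytesPerElement, int length) {
        return (long) bytesPerElement * length;
    }

    public static void main(String[] args) {
        // The BYTES constants report the size of one value of each primitive type.
        System.out.println("byte:  " + Byte.BYTES + " byte, range "
                + Byte.MIN_VALUE + " to " + Byte.MAX_VALUE);
        System.out.println("int:   " + Integer.BYTES + " bytes, range "
                + Integer.MIN_VALUE + " to " + Integer.MAX_VALUE);
        System.out.println("float: " + Float.BYTES + " bytes, max value "
                + Float.MAX_VALUE);

        // One million ints carry four million bytes of element data.
        System.out.println("1,000,000 ints: "
                + payloadBytes(Integer.BYTES, 1_000_000) + " bytes of data");
    }
}
```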

2. Reference Data Types

a. Objects

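Objects in Java consume memory based on their instance fields. Methods do not add to the size of each instance, because their code is stored once per class. On top of the fields, every object carries an object header, and the JVM adds padding so that objects align to a fixed boundary (8 bytes by default on HotSpot). Fields that refer to other objects store only a reference, and the referenced objects occupy memory of their own.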
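To get a feel for how header, fields, and padding add up, here is a rough back-of-the-envelope estimator. It is a sketch, not a measurement. It assumes a 64-bit HotSpot JVM with compressed class pointers (a 12-byte header) and 8-byte object alignment, and the class name `ObjectSizeEstimate` is invented for this example. Actual layouts vary by JVM and flags, so use a tool such as JOL when you need real numbers.

```java
class ObjectSizeEstimate {
    // Assumption: 64-bit HotSpot with compressed class pointers.
    static final int HEADER_BYTES = 12;
    // Assumption: default 8-byte object alignment.
    static final int ALIGNMENT = 8;

    // Round a size up to the next multiple of the alignment.
    static long align(long bytes) {
        return (bytes + ALIGNMENT - 1) / ALIGNMENT * ALIGNMENT;
    }

    // Estimated shallow size of an object whose instance fields total fieldBytes.
    static long estimate(long fieldBytes) {
        return align(HEADER_BYTES + fieldBytes);
    }

    public static void main(String[] args) {
        // One byte field: 12 + 1 = 13, padded up to 16.
        System.out.println("one byte field:  " + estimate(1));
        // One int field (the shape of java.lang.Integer): 12 + 4 = 16.
        System.out.println("one int field:   " + estimate(4));
        // One int and one long field: 12 + 4 + 8 = 24.
        System.out.println("int + long:      " + estimate(12));
    }
}
```

Note how the object with a single `byte` field and the object with a single `int` field land on the same estimated size: padding can erase the saving from a smaller field type.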

3. Arrays

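Arrays are fixed-length sequences of elements of a single type. An array is itself an object, so its memory consumption is an object header that includes the length, plus the element size multiplied by the number of elements. In an array of objects, each element is a reference, and the referenced objects are allocated separately.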
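The dependence on element type and element count can be written down as a small formula. The sketch below assumes a 64-bit HotSpot JVM with compressed pointers, where an array header is 16 bytes (including the length) and objects align to 8 bytes. Those constants and the class name `ArraySizeEstimate` are assumptions for illustration, not values guaranteed by the Java specification.

```java
class ArraySizeEstimate {
    // Assumption: 64-bit HotSpot with compressed pointers (header includes length).
    static final int ARRAY_HEADER_BYTES = 16;
    // Assumption: default 8-byte object alignment.
    static final int ALIGNMENT = 8;

    // Estimated shallow size of a primitive array.
    static long estimate(int bytesPerElement, int length) {
        long raw = ARRAY_HEADER_BYTES + (long) bytesPerElement * length;
        return (raw + ALIGNMENT - 1) / ALIGNMENT * ALIGNMENT;
    }

    public static void main(String[] args) {
        // 16 + 4 * 1000 = 4016, already a multiple of 8.
        System.out.println("int[1000]:  " + estimate(Integer.BYTES, 1000));
        // 16 + 1 * 1000 = 1016, already a multiple of 8.
        System.out.println("byte[1000]: " + estimate(Byte.BYTES, 1000));
        // 16 + 1 * 10 = 26, padded up to 32.
        System.out.println("byte[10]:   " + estimate(Byte.BYTES, 10));
    }
}
```

For large arrays the fixed header is negligible and the element type dominates, which is why a `byte[]` is about a quarter of the size of an `int[]` of the same length.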

4. Strings

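Since Java 9, a `String` is backed by a `byte` array (earlier versions used a `char` array). With compact strings enabled, which is the default, a string whose characters all fit in Latin-1 uses 1 byte per character, and any other string uses 2 bytes per UTF-16 code unit. The memory usage therefore varies with both the length of the string and the characters it contains.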
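As a rough sketch of how length and encoding interact on Java 9 and later, where compact strings are enabled by default, the helper below estimates the size of a string's character data. The internal storage is not visible through the public API, so this mimics the rule rather than inspecting it: 1 byte per character if every character fits in Latin-1, otherwise 2 bytes per UTF-16 code unit. The class name `StringPayloadEstimate` is hypothetical, and the estimate excludes the headers of the `String` object and its backing array.

```java
class StringPayloadEstimate {
    // Estimated bytes of character data, assuming compact strings are enabled.
    static int payloadBytes(String s) {
        for (int i = 0; i < s.length(); i++) {
            if (s.charAt(i) > 0xFF) {
                // One character outside Latin-1 makes the whole string UTF-16.
                return 2 * s.length();
            }
        }
        return s.length();
    }

    public static void main(String[] args) {
        System.out.println(payloadBytes("hello"));       // all Latin-1
        System.out.println(payloadBytes("caf\u00E9"));   // U+00E9 still fits in Latin-1
        System.out.println(payloadBytes("h\u20ACllo"));  // U+20AC (euro sign) does not
    }
}
```

A single character outside Latin-1 doubles the data size of the whole string, which matters for applications that hold many long strings in memory.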

Strategies for Memory Optimization

1. Use Primitive Types Wisely

Opting for primitive data types such as `byte`, `int`, and `float` can significantly contribute to memory efficiency in your Java applications. These data types consume less memory compared to their object counterparts. For instance, an `int` takes 4 bytes, while an `Integer` is a full object with its own header and needs a reference pointing to it, which on a typical 64-bit JVM comes to roughly 16 bytes plus the reference.

However, it’s essential to balance memory savings with code readability and maintainability. Choose primitive types when appropriate, especially for variables that never need to be null and are not stored in generic collections. Still, remember that object types offer benefits like nullability, are required as type arguments for generics such as `List<Integer>`, and can be more expressive in certain situations.
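The trade-off can be seen by writing the same computation both ways. In this minimal sketch (the class `PrimitiveVsBoxed` and its method names are invented for illustration), the primitive version stores values directly in the array, while the boxed version stores references to `Integer` objects and unboxes each one on read.

```java
import java.util.ArrayList;
import java.util.List;

class PrimitiveVsBoxed {
    // Values live directly in the array: 4 bytes of data per element.
    static long sumPrimitive(int[] values) {
        long total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    // Each element is a reference to a separate Integer object.
    static long sumBoxed(List<Integer> values) {
        long total = 0;
        for (Integer v : values) {
            total += v; // auto-unboxing on every iteration
        }
        return total;
    }

    public static void main(String[] args) {
        int n = 1000;
        int[] primitives = new int[n];
        List<Integer> boxed = new ArrayList<>(n);
        for (int i = 0; i < n; i++) {
            primitives[i] = i + 1;
            // Auto-boxing: values outside the small cached range allocate an Integer.
            boxed.add(i + 1);
        }
        System.out.println(sumPrimitive(primitives));
        System.out.println(sumBoxed(boxed));
    }
}
```

Both methods return the same result. The difference is in what sits on the heap: one array of `int` values versus a list of references plus the `Integer` objects behind them.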

2. Minimize Object Creation

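Excessive object creation can lead to unnecessary memory consumption and negatively impact performance. To optimize memory, strive to minimize the creation of temporary objects, particularly inside hot loops. Modern garbage collectors reclaim short-lived objects cheaply, so object pooling tends to pay off mainly for objects that are large or expensive to construct, such as buffers or connections. In those cases, consider implementing object pooling mechanisms or reusing objects to reduce the overhead associated with creating new instances.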

Reuse objects in scenarios where it makes sense, but exercise caution to ensure thread safety and proper management of pooled objects. This approach not only conserves memory but also helps decrease the workload on the garbage collector, improving overall application performance.
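As an illustration of object reuse, here is a deliberately small buffer pool. It is a sketch under stated assumptions: `BufferPool` is a hypothetical class, it is not thread-safe, and it has no upper bound on how many buffers it retains. A production pool would need synchronization or per-thread pools and a size limit.

```java
import java.util.ArrayDeque;
import java.util.Arrays;

class BufferPool {
    private final ArrayDeque<byte[]> free = new ArrayDeque<>();
    private final int bufferSize;

    BufferPool(int bufferSize) {
        this.bufferSize = bufferSize;
    }

    // Hand out a pooled buffer if one is available, otherwise allocate a new one.
    byte[] acquire() {
        byte[] buffer = free.poll(); // returns null when the pool is empty
        return buffer != null ? buffer : new byte[bufferSize];
    }

    // Return a buffer to the pool; the caller must stop using it afterwards.
    void release(byte[] buffer) {
        if (buffer.length == bufferSize) {
            Arrays.fill(buffer, (byte) 0); // clear stale data before reuse
            free.push(buffer);
        }
    }

    public static void main(String[] args) {
        BufferPool pool = new BufferPool(8192);
        byte[] first = pool.acquire();
        pool.release(first);
        byte[] second = pool.acquire();
        System.out.println("same instance reused: " + (first == second));
    }
}
```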

3. Efficiently Manage Collections

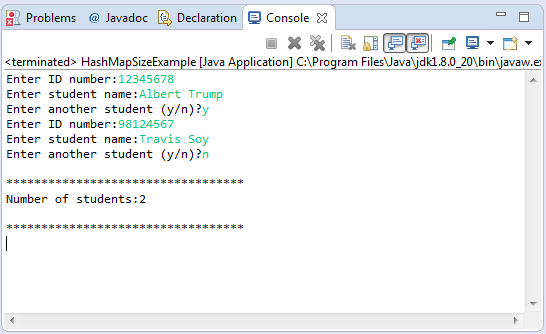

Java’s collection classes like `ArrayList` and `HashMap` are powerful tools for managing data. However, inefficient use of collections can lead to excessive memory allocation. To optimize memory usage, specify an appropriate initial capacity when creating these collections. This prevents frequent resizing operations. Each resize allocates a larger backing array and copies or rehashes the existing entries, so the old and new arrays briefly coexist in memory.

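Additionally, consider using specialized collections from the `java.util.concurrent` package when building multi-threaded applications. These collections are designed for safe concurrent access and reduce lock contention compared with wrapping a standard collection in synchronization. They do not necessarily use less memory than their standard counterparts, so choose them for thread safety rather than for footprint.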
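Pre-sizing looks like this in practice. The sketch below assumes the number of elements is known up front. `PresizedCollections` and its methods are hypothetical names, and the `HashMap` capacity calculation relies on the default load factor of 0.75, since the map resizes once it is more than 75% full.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class PresizedCollections {
    // Capacity that lets a HashMap hold expectedEntries without resizing,
    // assuming the default load factor of 0.75.
    static int hashMapCapacityFor(int expectedEntries) {
        return (int) Math.ceil(expectedEntries / 0.75);
    }

    static List<Integer> squares(int n) {
        // The backing array is allocated once at the required size.
        List<Integer> result = new ArrayList<>(n);
        for (int i = 0; i < n; i++) {
            result.add(i * i);
        }
        return result;
    }

    static Map<Integer, Integer> squaresByValue(int n) {
        Map<Integer, Integer> result = new HashMap<>(hashMapCapacityFor(n));
        for (int i = 0; i < n; i++) {
            result.put(i, i * i);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(squares(5));
        System.out.println(squaresByValue(5));
        System.out.println("capacity for 100 entries: " + hashMapCapacityFor(100));
    }
}
```

On Java 19 and later, `HashMap.newHashMap(int)` performs the same capacity calculation for you.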

4. Be Mindful of String Operations

String manipulation can be a common source of memory inefficiency. Because `String` objects are immutable, every modification produces a new string, and concatenating inside a loop creates a fresh intermediate string on each iteration. To optimize memory usage in such cases, use the `StringBuilder` class. `StringBuilder` appends into a single growable buffer, so you can concatenate and modify text without creating unnecessary intermediate objects.
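The difference is easiest to see in a loop. In this minimal sketch (`JoinWithBuilder` and its methods are names made up for the example), both methods produce the same text, but the first creates a new `String` on every iteration while the second appends into one buffer.

```java
class JoinWithBuilder {
    // Each += builds a new String that copies everything accumulated so far.
    static String joinNaive(String[] parts) {
        String result = "";
        for (String part : parts) {
            result += part + ",";
        }
        return result;
    }

    // A single StringBuilder grows its internal buffer as needed.
    static String joinWithBuilder(String[] parts) {
        StringBuilder sb = new StringBuilder();
        for (String part : parts) {
            sb.append(part).append(',');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String[] parts = {"a", "b", "c"};
        System.out.println(joinNaive(parts));
        System.out.println(joinWithBuilder(parts));
    }
}
```

For a one-off expression such as `a + b + c` outside a loop, plain concatenation is fine. The builder matters when the number of appends grows with the input.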

By following these memory optimization strategies, you can strike a balance between memory efficiency and code maintainability, resulting in Java applications that are not only performant but also resource-conscious. Remember that each application may have unique requirements, so adapt these strategies to suit your specific use cases while keeping memory efficiency at the forefront of your development efforts.

Conclusion

Memory consumption is a critical consideration in Java development. By understanding how different data types affect memory usage and implementing memory optimization strategies, you can build more efficient and cost-effective Java applications. Keep these principles in mind as you design and develop your software to achieve optimal performance and scalability. Remember, efficient memory management is not just a technical concern but also a strategic advantage for your projects.